Yuuki

Code Generation

A multilingual code generation model trained on a Redmi 12 smartphone. Zero cloud. Zero budget. Built by one person with pure determination.

Yuuki-best

A code generation model based on GPT-2 (124M parameters), trained from scratch on a smartphone CPU. Currently at checkpoint 2000 with measurable improvements.
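As a rough check on the 124M figure, the sketch below counts parameters for the published GPT-2 small configuration: 12 layers, 768 hidden size, a 50,257-token vocabulary, 1,024 context positions, and an output head tied to the token embedding. These hyperparameters are assumptions, not confirmed Yuuki settings. A custom tokenizer would change the vocabulary term. The `GptConfig` and `gpt2_params` names are illustrative only.

```rust
/// Published GPT-2 small hyperparameters (assumed here, not confirmed for Yuuki).
struct GptConfig {
    n_layer: u64, // transformer blocks
    d_model: u64, // hidden size
    vocab: u64,   // tokenizer vocabulary size
    n_ctx: u64,   // context length (size of the learned position table)
}

/// Counts parameters of a GPT-2-style decoder with learned position
/// embeddings and an output head tied to the token embedding.
fn gpt2_params(c: &GptConfig) -> u64 {
    let d = c.d_model;
    let embeddings = c.vocab * d + c.n_ctx * d; // token + position tables
    let layer_norm = 2 * d; // gain + bias
    let attn = (d * 3 * d + 3 * d) // fused Q/K/V projection (weights + bias)
        + (d * d + d); // attention output projection
    let mlp = (d * 4 * d + 4 * d) // expand to 4 * d_model
        + (4 * d * d + d); // project back to d_model
    let per_block = 2 * layer_norm + attn + mlp; // two LayerNorms per block
    embeddings + c.n_layer * per_block + layer_norm // plus the final LayerNorm
}

fn main() {
    let small = GptConfig { n_layer: 12, d_model: 768, vocab: 50_257, n_ctx: 1_024 };
    println!("{} parameters", gpt2_params(&small)); // 124439808
}
```

Replacing the learned position table with rotary embeddings would remove the `n_ctx * d_model` term, which is 786,432 parameters at these sizes.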

Training Details

Language Scores (CP-2000)

Average: 24.6/100 (+146% from checkpoint 1400)

Evolution

| Metric | CP-1400 | CP-2000 |
|---|---|---|
| Training progress | 3.7% | 5.3% |
| Agda score | 20 | 55 |
| C score | 8 | 20 |
| Assembly score | 2 | 15 |
| Average score | ~10 | 24.6 |
| Speed (s/step) | 100 | 86 |
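The headline "+146%" and the speed gain both follow directly from the table. The minimal Rust check below reproduces them. The `percent_change` helper is illustrative and not part of any Yuuki tool. The CP-1400 average is given only as ~10, so the percentage is approximate.

```rust
/// Relative change from `before` to `after`, in percent.
fn percent_change(before: f64, after: f64) -> f64 {
    (after - before) / before * 100.0
}

fn main() {
    // Average score: ~10 (CP-1400) -> 24.6 (CP-2000)
    println!("score: {:+.0}%", percent_change(10.0, 24.6)); // +146%
    // Time per step: 100 s -> 86 s (negative means each step is faster)
    println!("time/step: {:+.0}%", percent_change(100.0, 86.0)); // -14%
    // Throughput at each checkpoint, in steps per hour
    println!("{:.1} -> {:.1} steps/h", 3600.0 / 100.0, 3600.0 / 86.0); // 36.0 -> 41.9
}
```

A 14% cut in time per step corresponds to about 16% more steps per hour (100 / 86 ≈ 1.16).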

```agda
module Main where
open import Function
--
open import Data.Nat
open import Function
open import Data.Nat
open import Data.Unit
open import Data.Nat.Dec
open import Data.Properties.Nat
open import Data.Nat.Properties
open import Data.Unary
```

The Complete Toolkit

From downloading models to chatting with them in your terminal. Everything built in Rust, optimized for every platform.

YUY

CLI Tool

Download, manage, and run Yuuki models locally. Three commands to get started. Written in Rust.

- Model downloads with auto-quantization

- Local inference via llama.cpp / ollama

- Cross-platform (Termux, Linux, macOS, Windows)

- System diagnostics & health checks

```sh
$ yuy setup && yuy download Yuuki-best && yuy run Yuuki-best
```

YUY-Chat

TUI Chat

Beautiful terminal chat interface. Stream responses word by word. Save and reload conversations. All local.

- Real-time streaming responses

- Conversation history (JSON)

- Model selector & presets

- HuggingFace cloud integration

```sh
$ yuy-chat
```

Smart Downloads

Auto-selects the best quantization based on your hardware and RAM.

Multiple Quantizations

q4_0, q5_k_m, q8_0, and f32. From phones to research workstations.
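YUY's actual selection rule is not documented here. The sketch below illustrates one plausible approach. It estimates each format's weight size from the parameter count and an approximate bits-per-weight figure, then picks the highest-precision format that fits within a fraction of free RAM. The following are all assumptions:

- the `pick_quant` function;
- the 50% RAM budget;
- the bits-per-weight values, which are approximate figures for llama.cpp's block formats (q5_k_m is a rough average).

The sketch counts weights only, not runtime overhead.

```rust
/// Formats listed for Yuuki with approximate bits per weight. Block formats
/// store per-block scales, hence fractional values (assumed figures).
const QUANTS: [(&str, f64); 4] = [
    ("f32", 32.0),
    ("q8_0", 8.5),
    ("q5_k_m", 5.5),
    ("q4_0", 4.5),
];

/// Hypothetical rule: highest-precision format whose estimated weight size
/// fits in `budget_fraction` of available RAM; None if nothing fits.
fn pick_quant(n_params: u64, available_ram_bytes: u64, budget_fraction: f64) -> Option<&'static str> {
    let budget = available_ram_bytes as f64 * budget_fraction;
    QUANTS
        .iter()
        .find(|(_, bpw)| n_params as f64 * bpw / 8.0 <= budget)
        .map(|(name, _)| *name)
}

fn main() {
    let params = 124_000_000; // Yuuki-best parameter count
    let mib = 1024 * 1024;
    // Weight estimates: f32 ≈ 496 MB, q8_0 ≈ 132 MB, q5_k_m ≈ 85 MB, q4_0 ≈ 70 MB
    for ram_mib in [4096, 512, 200, 150, 100] {
        println!("{ram_mib} MiB free -> {:?}", pick_quant(params, ram_mib * mib, 0.5));
    }
}
```

Under these assumptions, the loop selects f32, q8_0, q5_k_m, q4_0, and then nothing as free RAM shrinks. A real tool would also reserve memory for the context cache and the runtime itself.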

Mobile-First

Termux is the primary target. Optimized for constrained hardware.

Cross-Platform

Termux, Linux, macOS, and Windows. Same experience everywhere.

Zero Config

Platform detection, RAM recommendations, runtime auto-discovery.

~50 ms Startup

Lightweight Rust binaries (~8 MB) that use ~20 MB of RAM when idle.

Try Yuuki Now

Generate code directly in your browser with the Yuuki Space on HuggingFace. No setup required.

The Space may take a moment to load if it has been idle. Powered by HuggingFace Spaces.

Progress is Real

Every metric is measurable and reproducible. Trained at zero cost with consistent improvements across checkpoints.

Foundation

- Training pipeline on mobile

- Custom tokenizer

- Checkpoint system

- Evaluation framework

Current

- Continued pre-training

- Language expansion

- YUY CLI tool (Rust)

- YUY-Chat TUI interface

Upcoming

- Yuuki v0.1 full release

- Research paper publication

- Native model (from scratch)

- Community model hub

Why This Matters

Accessibility

Students without GPU access can experiment with ML training. No cloud account, no credit card, no barriers.

Democratization

Proves that meaningful ML research can happen anywhere in the world, with just a phone and determination.

Edge ML

Explores the limits of what is possible with mobile hardware, pushing edge ML training into new territory.

News & Milestones

Follow the journey of Yuuki from concept to reality. Every step, every milestone, documented here.

Help, Yuuki Is Consuming Me

When you start training a model on your phone "just for fun" and suddenly it becomes your entire personality. Send help.

r/yuuki_omg Is Now Live on Reddit

The official Yuuki community subreddit is open at r/yuuki_omg. A Discord server is also in the works — just need to find the motivation to set up all the channels.


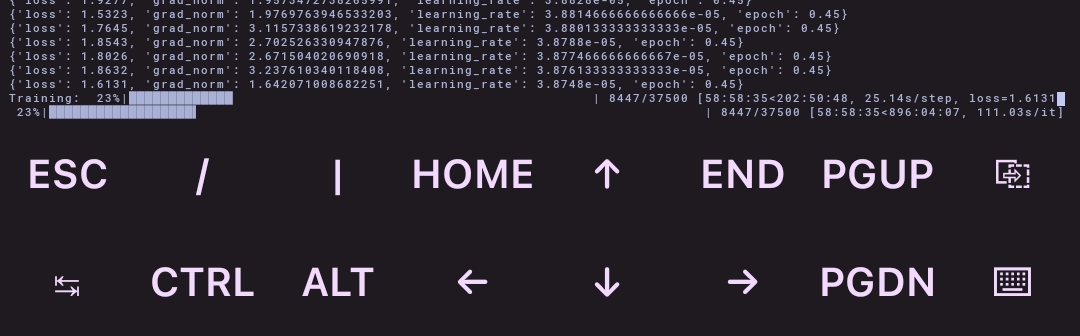

Training Surpasses 8,000 Steps

Yuuki has officially crossed 8,400+ training steps (23% of the way there) running entirely on a Redmi 12 via Termux. Loss is sitting at ~1.6 and steadily dropping. The grind never stops.
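Converting the reported loss of ~1.6 to perplexity makes it easier to interpret. This assumes the loss is the mean per-token cross-entropy in nats, which is the PyTorch default. The implied perplexity is e^1.6 ≈ 4.95, so the model is on average about as uncertain as a uniform choice among five tokens. The minimal Rust check below uses illustrative helpers, `perplexity` and `bits_per_token`.

```rust
use std::f64::consts::LN_2;

/// Perplexity implied by a mean per-token cross-entropy loss in nats.
fn perplexity(loss_nats: f64) -> f64 {
    loss_nats.exp()
}

/// The same loss expressed in bits per token.
fn bits_per_token(loss_nats: f64) -> f64 {
    loss_nats / LN_2
}

fn main() {
    let loss = 1.6; // reported loss at ~8,400 steps (assumed to be in nats)
    println!("perplexity ≈ {:.2}", perplexity(loss)); // 4.95
    println!("≈ {:.2} bits/token", bits_per_token(loss)); // 2.31
}
```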

Yuuki Research Paper in Progress

A research paper documenting the full Yuuki training methodology is currently being written. arXiv is giving us trouble with publishing, but a draft is available on Google Drive in the meantime.

Yuuki Project Website Goes Live

The official Yuuki Project landing page is now live, featuring a live Gradio demo, the full model ecosystem, evaluation stats, and a donation section. Built with Next.js and Tailwind CSS.

Training Begins on Redmi 12

Yuuki's training officially kicked off on a Snapdragon 685 with 4GB RAM. Using a custom GPT-2 architecture with rotary positional embeddings, the model trains entirely on-device with zero cloud budget.
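Yuuki's training code is not shown here, so the sketch below illustrates the general rotary positional embedding (RoPE) technique, not its actual implementation. RoPE encodes position by rotating each pair of query/key dimensions through an angle proportional to the token's position. As a result, the attention dot product depends only on the relative offset between two tokens. The following are assumptions taken from the common RoFormer formulation, and Yuuki's variant may differ:

- the function names;
- the interleaved pairing of dimensions;
- the base of 10,000.

```rust
/// Rotary positional embedding: rotates each pair (x[2i], x[2i+1]) of a
/// query or key vector by the angle pos * base^(-2i/d).
fn apply_rope(x: &mut [f32], pos: usize, base: f32) {
    let d = x.len();
    assert!(d % 2 == 0, "RoPE needs an even head dimension");
    for i in 0..d / 2 {
        let theta = (pos as f32) * base.powf(-2.0 * i as f32 / d as f32);
        let (sin, cos) = theta.sin_cos();
        let (a, b) = (x[2 * i], x[2 * i + 1]);
        x[2 * i] = a * cos - b * sin; // 2-D rotation of the pair
        x[2 * i + 1] = a * sin + b * cos;
    }
}

fn dot(a: &[f32], b: &[f32]) -> f32 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

fn main() {
    let q: [f32; 4] = [1.0, 0.5, -0.3, 2.0];
    let k: [f32; 4] = [0.2, -1.0, 0.7, 0.4];
    // Attention score between q at position m and k at position n.
    let score = |m: usize, n: usize| {
        let (mut qm, mut kn) = (q, k);
        apply_rope(&mut qm, m, 10_000.0);
        apply_rope(&mut kn, n, 10_000.0);
        dot(&qm, &kn)
    };
    // Same offset (m - n = 2), so the two scores match up to rounding.
    println!("{:.4} {:.4}", score(3, 1), score(5, 3));
}
```

Because RoPE rotates vectors instead of adding a learned position vector, it needs no position-embedding parameters.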

YUY CLI & YUY-Chat Released

YUY, the Rust-based CLI tool for downloading and managing GGUF models, is now available. So is YUY-Chat, a full TUI chat interface with streaming, multi-turn conversations, and adaptive theming.

The Name "Yuuki" is Born

Inspired by the Japanese kanji for snow (Yuki) and the character Yuu from Girls' Last Tour, the project name Yuuki was chosen — carrying the quiet beauty of snow and the spirit of never giving up.

The Creator

agua_omg

Independent Developer & Creator of Yuuki

Hey, I'm agua -- a young independent developer. I started this project in January 2026 simply because I couldn't afford to keep paying for Claude anymore, so I decided to build my own open-source model for everyone. I'm open to collaborating with and supporting anyone who wants to try out or contribute to Yuuki.

Where does the name "Yuuki" come from?

It all started back in October 2025. I was working on another project and wanted to find a good name for it, so I looked into Japanese kanji and discovered Yuki -- meaning "snow."

Then in November 2025, I came across the anime Girls' Last Tour. The two protagonists are Yuu and Chi-chan, but I really fell in love with Yuu -- her personality is so unique and beautiful.

By December 2025, the two inspirations came together, and Yuuki was born -- a name that carries the quiet beauty of snow and the courageous spirit of a character who never gives up.

Support the Project

Yuuki is built with zero budget by a single person. Your support helps keep the project alive and growing -- better hardware, more training time, and new features.

Even a star on GitHub helps. Every contribution matters.